Running Roboflow Inference

This guide explains how to run AI-based image inference on ALPON X4 using Roboflow.

This guide shows how to use Roboflow's cloud-based inference on ALPON devices. You will set up a Roboflow workflow, prepare a Docker container, and deploy the application to an ALPON X4 device via ALPON Cloud. Because inference runs in the cloud, this method suits AI-based image analysis tasks that should not depend on the device's local processing power.


1. Cloud Inference with Roboflow API

Cloud inference is the simplest way to use Roboflow on ALPON devices. Images are sent to the Roboflow API, and results are returned over the network. This requires stable cellular or Wi-Fi connectivity (Sixfab SIM cards provide global coverage in 180+ countries).

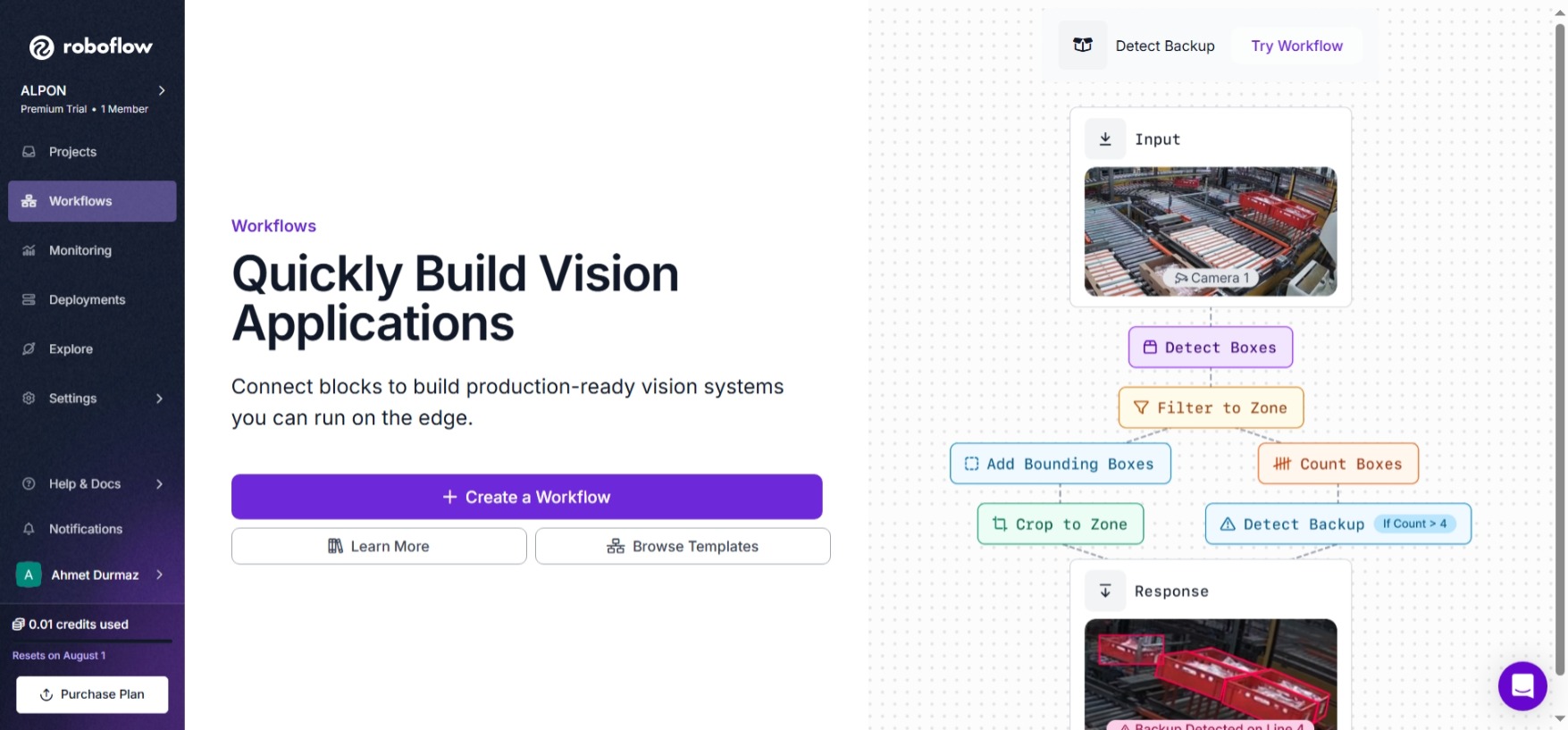

1.1 Create a Workflow in Roboflow

- Go to https://app.roboflow.com and sign up or log in.

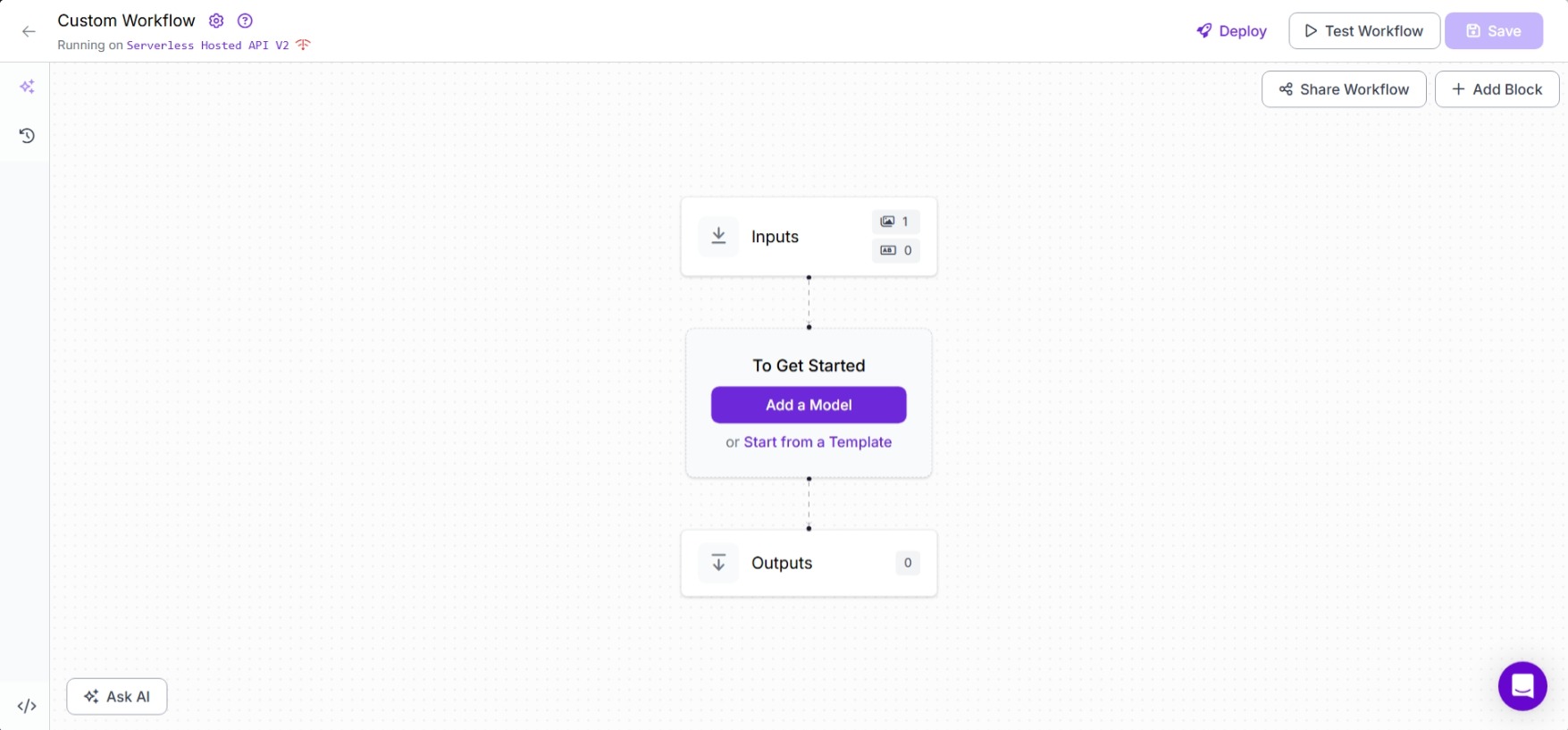

- In the left menu, select Workflows → Create a Workflow.

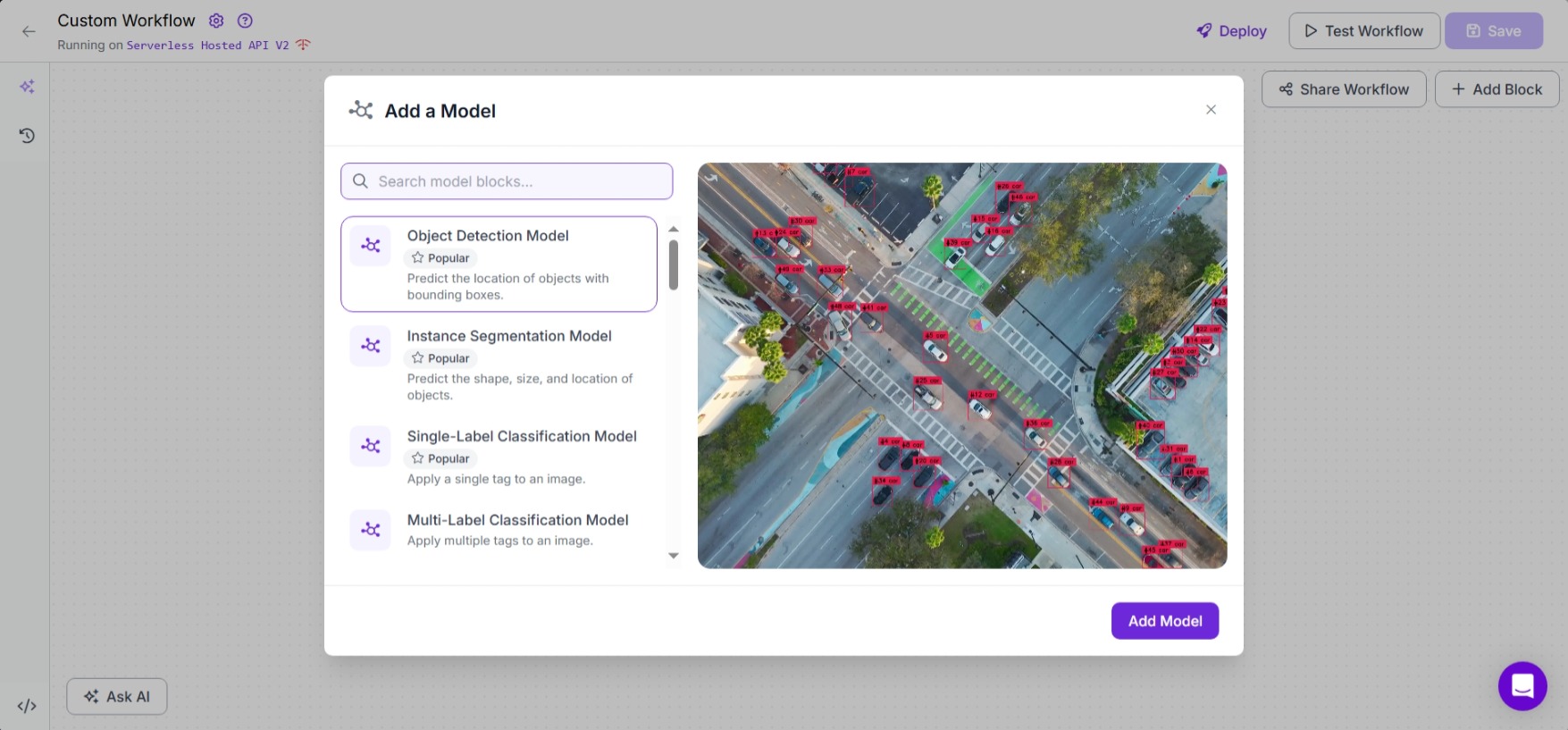

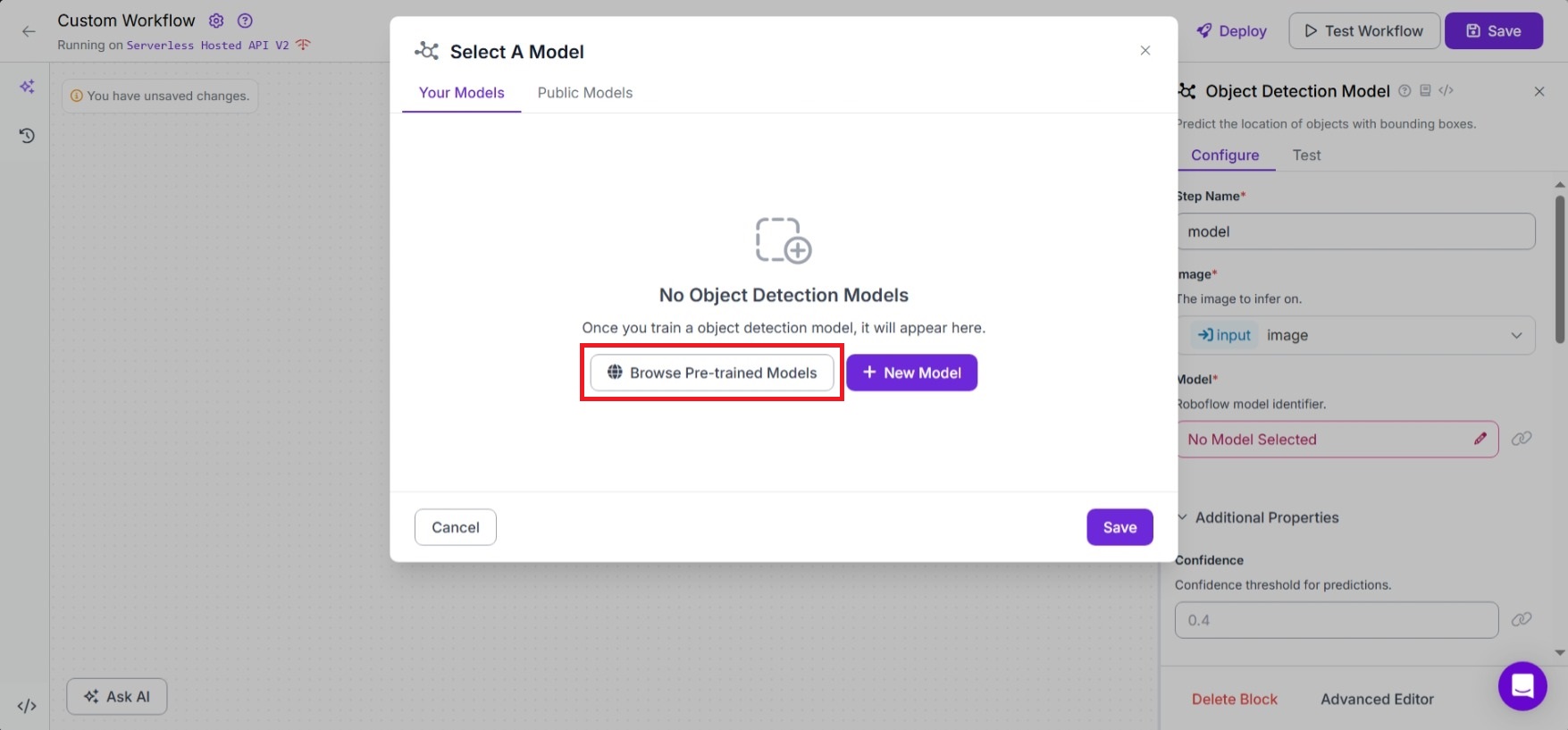

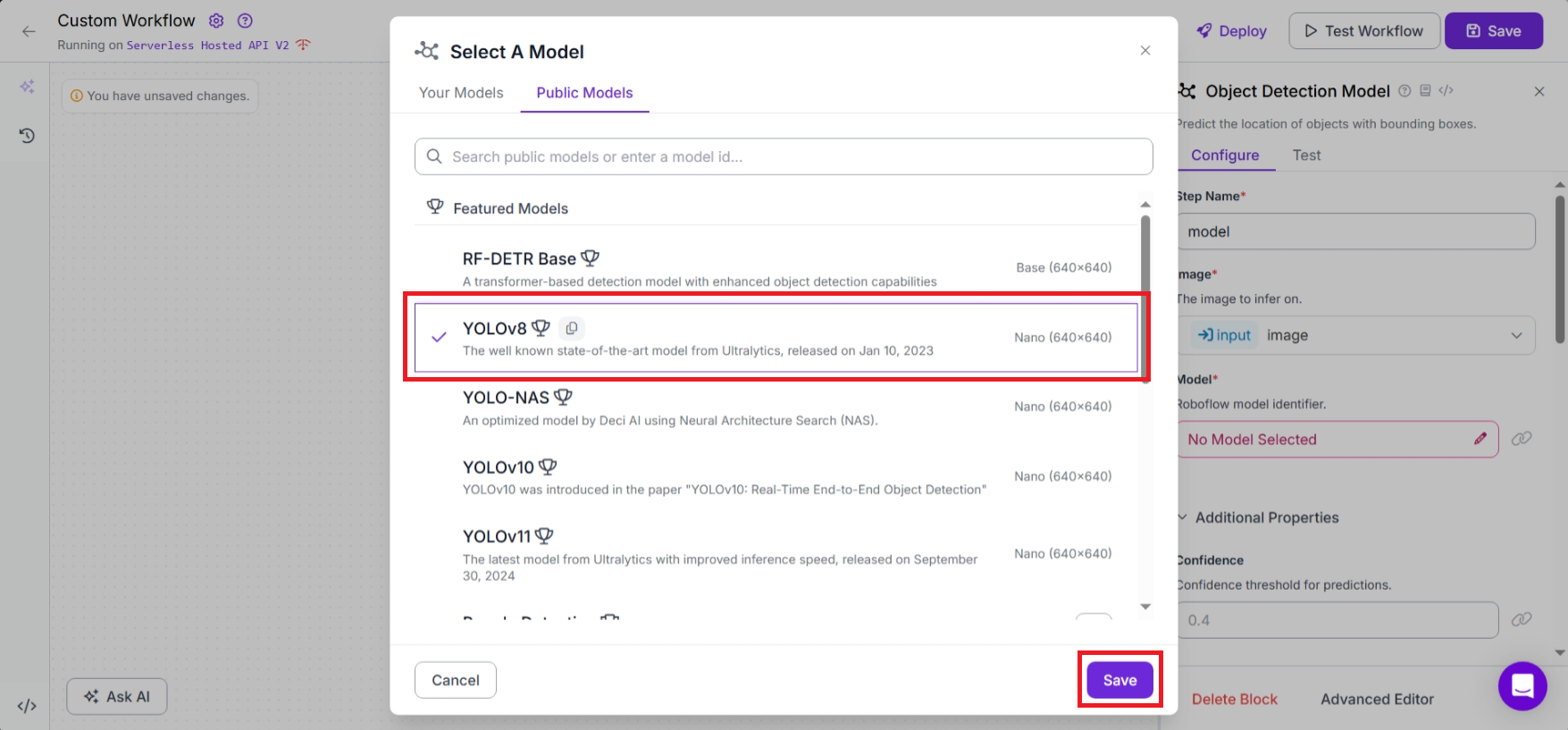

- Add a model by clicking Add a Model and selecting an Object Detection model.

- Click Browse Pre-trained Models and select YOLOv8.

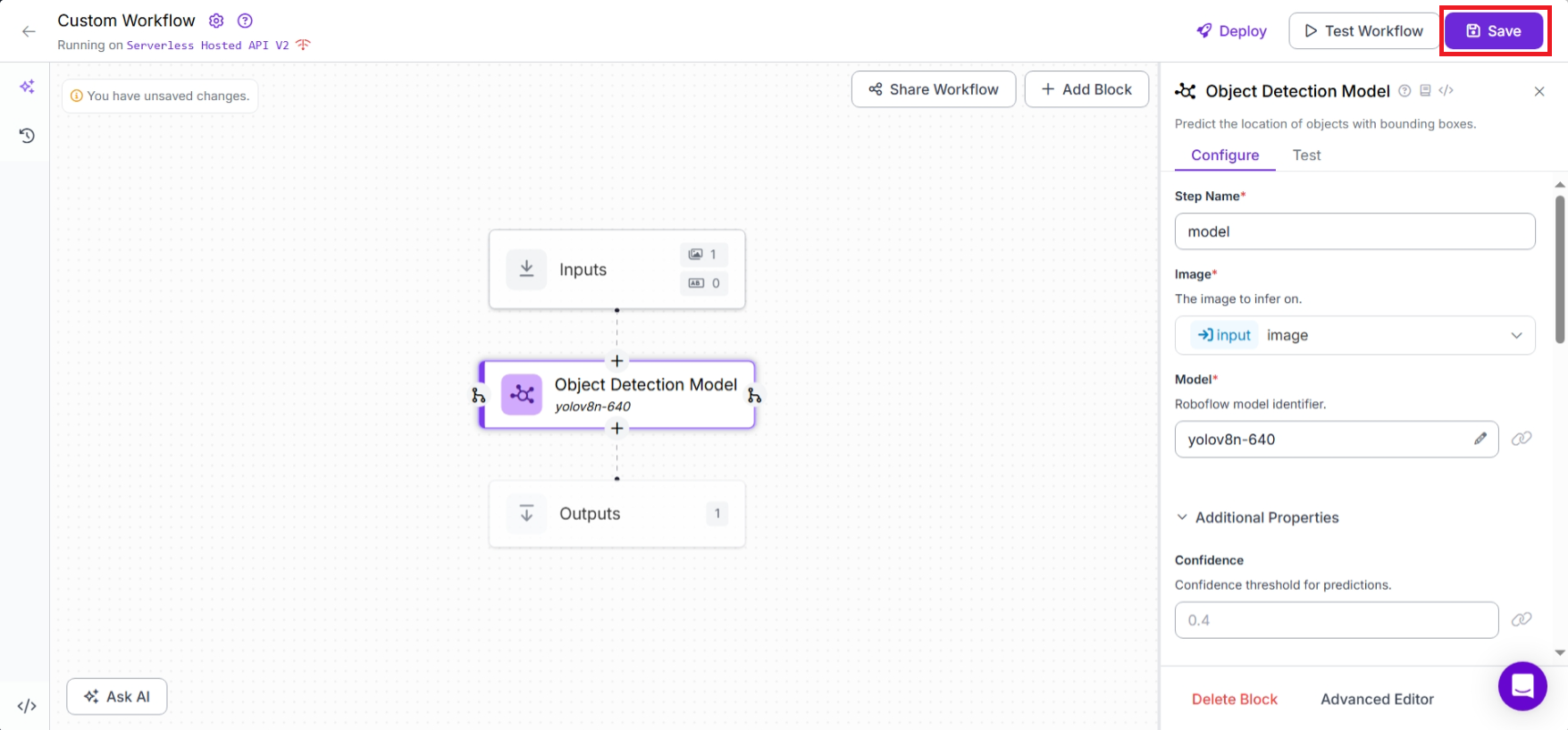

- Save the workflow.

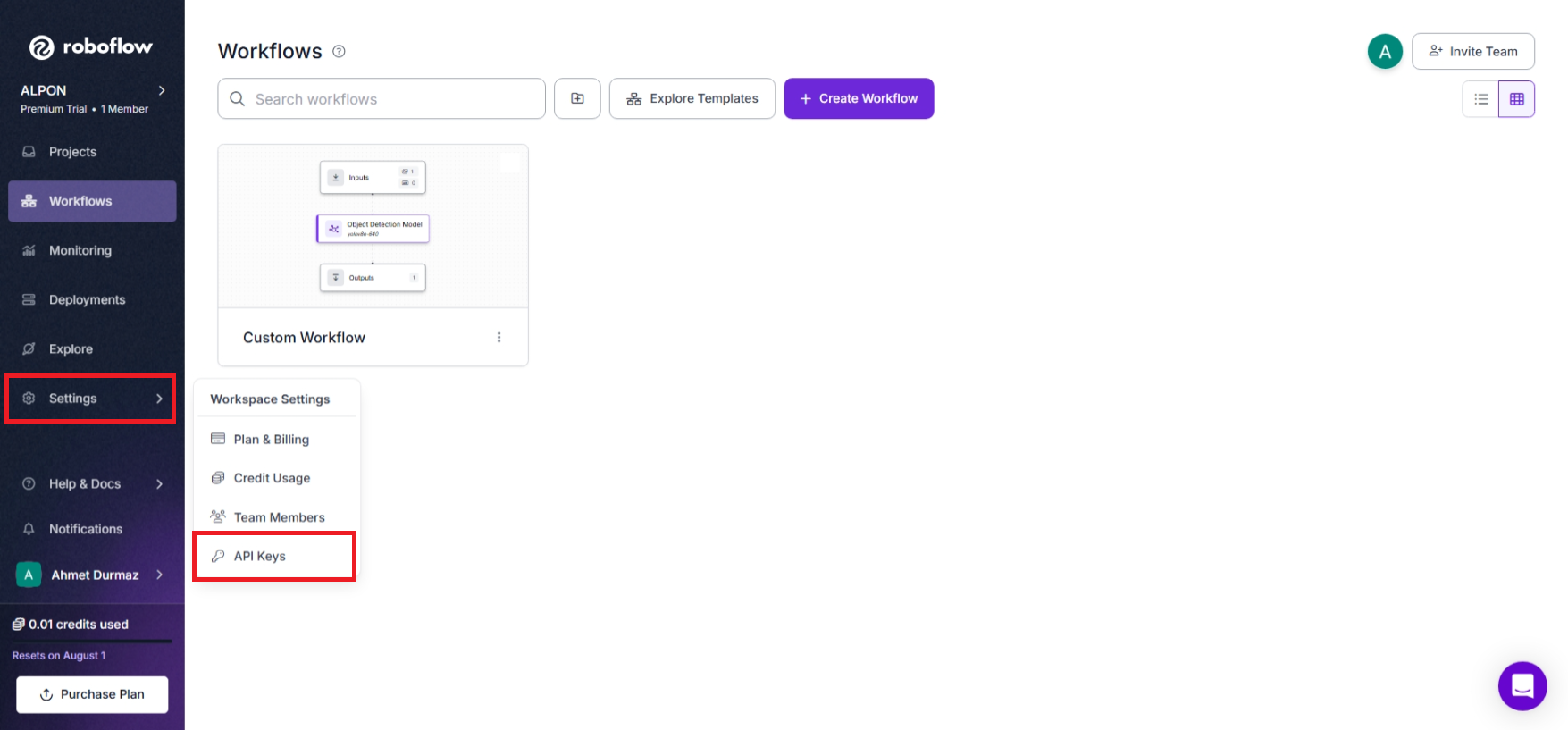

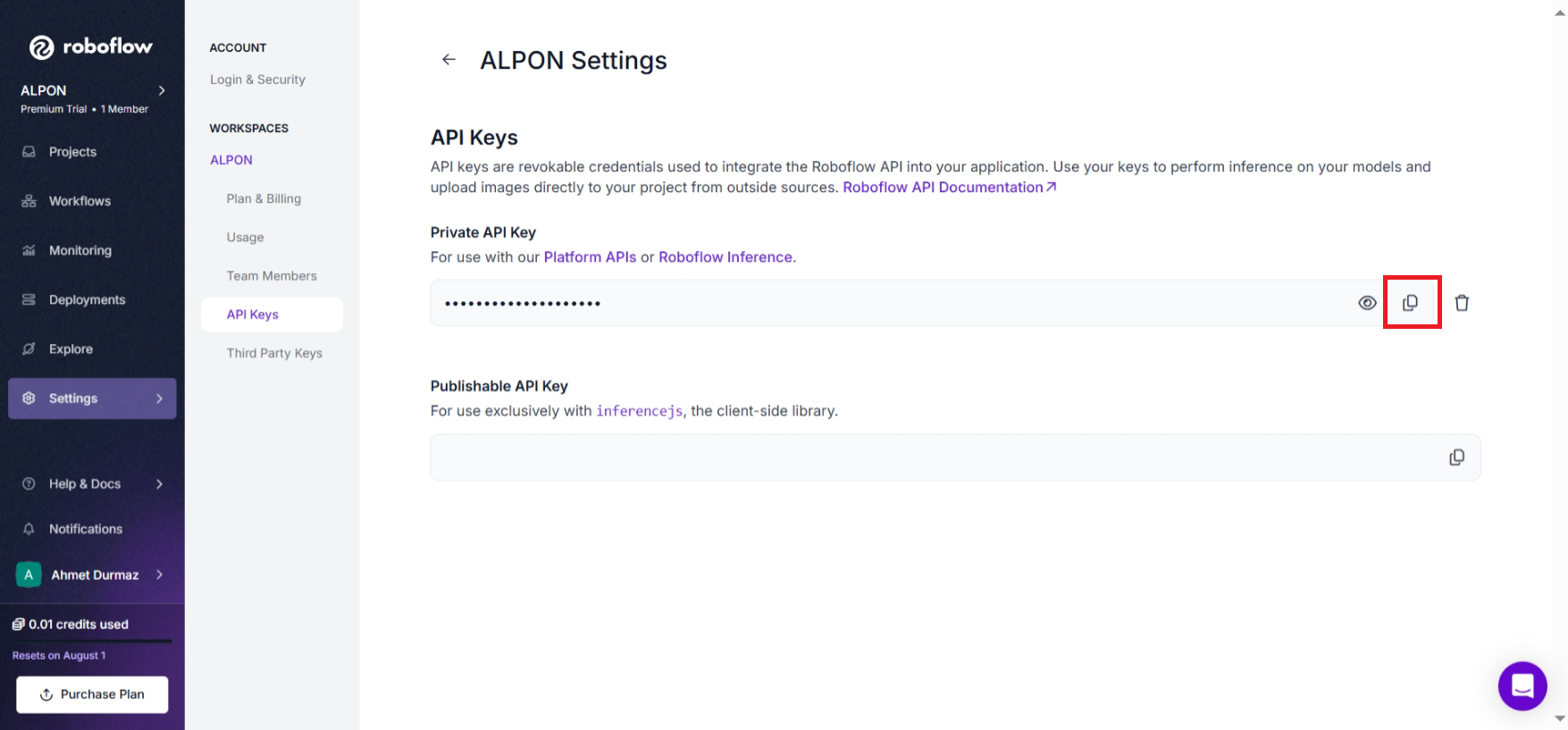

- Go to Settings → API Keys and copy your API key.

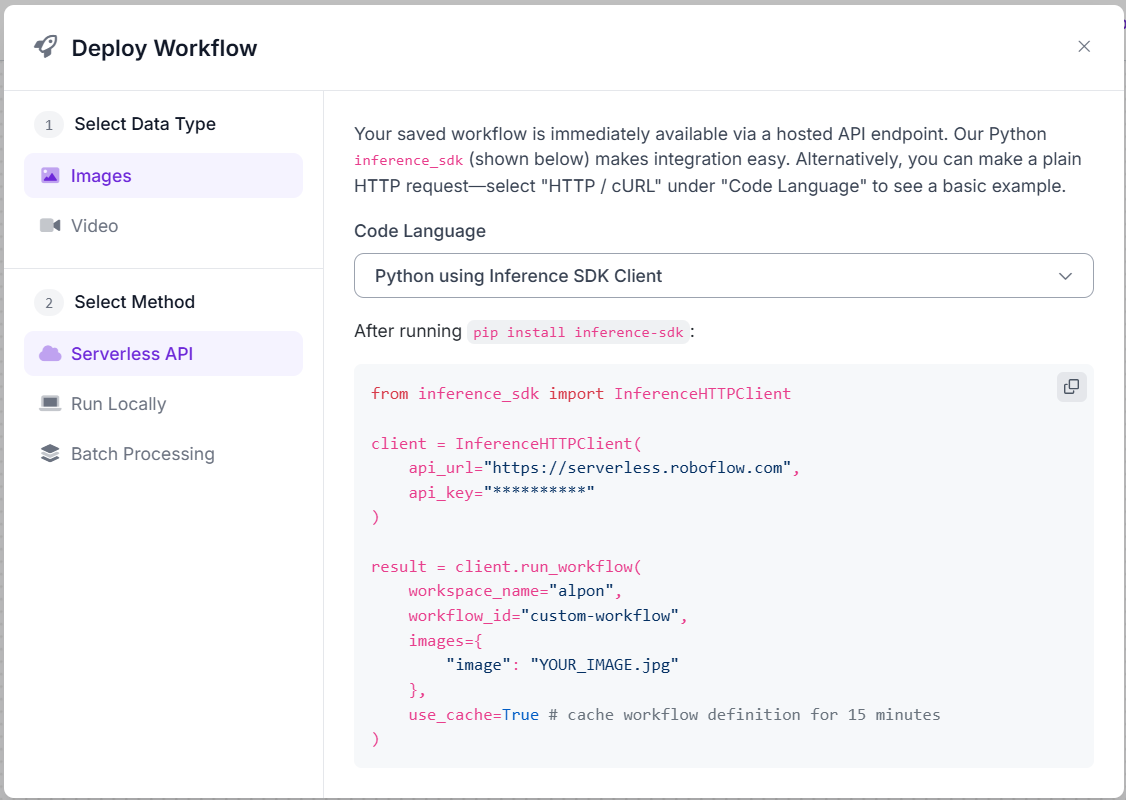

- In the workflow view, click Deploy to see the required environment variables. You’ll need them later.

1.2 Deploy on ALPON Cloud

You can package your Roboflow app into a Docker container and deploy it to your ALPON device using ALPON Cloud.

Example Dockerfile:

    FROM python:3.11-slim
    WORKDIR /app
    RUN pip install --no-cache-dir inference-sdk
    COPY main.py .
    CMD ["python", "main.py"]

Example main.py:

    # main.py
    import os
    import time

    from inference_sdk import InferenceHTTPClient

    # Configuration is read from environment variables set at deploy time.
    API_URL = os.getenv("ROBOFLOW_API_URL", "https://serverless.roboflow.com")
    API_KEY = os.getenv("ROBOFLOW_API_KEY")
    WORKSPACE_NAME = os.getenv("ROBOFLOW_WORKSPACE")
    WORKFLOW_ID = os.getenv("ROBOFLOW_WORKFLOW_ID")
    IMAGE_PATH = os.getenv("IMAGE_PATH", "/data/image.jpg")

    if not all([API_KEY, WORKSPACE_NAME, WORKFLOW_ID]):
        raise EnvironmentError("Missing required environment variables.")

    client = InferenceHTTPClient(api_url=API_URL, api_key=API_KEY)

    # Run the workflow on the image once a minute.
    while True:
        try:
            result = client.run_workflow(
                workspace_name=WORKSPACE_NAME,
                workflow_id=WORKFLOW_ID,
                images={"image": IMAGE_PATH},
                use_cache=True,
            )
            print("Inference result:", result)
        except Exception as e:
            print("Error during inference:", e)
        time.sleep(60)

The Roboflow API accepts only image inputs. To use a video or live feed, you must extract frames and send them one at a time (edge inference is not available on ALPON X4).

You may also modify the code to use a USB camera via cv2.VideoCapture(), though this is not officially supported in the cloud-based flow.
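Since the API accepts one image at a time, a video source has to be sampled into individual frames first. The following is a minimal sketch, assuming OpenCV (for example the `opencv-python-headless` package) has been added to the container image. The helper names `frame_indices` and `extract_frames`, the two-second interval, and the output directory are illustrative and not part of the original application.

```python
from pathlib import Path


def frame_indices(total_frames: int, fps: float, every_seconds: float) -> list[int]:
    """Return the indices of frames spaced roughly `every_seconds` apart."""
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        return []
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


def extract_frames(video_path: str, out_dir: str, every_seconds: float = 2.0) -> list[str]:
    """Save the sampled frames of a video file as JPEGs and return their paths."""
    import cv2  # assumption: OpenCV is installed in the container image

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise RuntimeError(f"Cannot open video source: {video_path}")
    try:
        fps = cap.get(cv2.CAP_PROP_FPS)
        total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        wanted = set(frame_indices(total, fps, every_seconds))
        Path(out_dir).mkdir(parents=True, exist_ok=True)
        saved = []
        index = 0
        while True:
            ok, frame = cap.read()
            if not ok:  # end of file or read error
                break
            if index in wanted:
                path = str(Path(out_dir) / f"frame_{index:06d}.jpg")
                cv2.imwrite(path, frame)
                saved.append(path)
            index += 1
        return saved
    finally:
        cap.release()
```

Each returned path can then be passed to `run_workflow` in place of `IMAGE_PATH`. This sketch targets video files; a live camera opened with `cv2.VideoCapture(0)` reports no meaningful frame count, so a time-based capture loop would be needed there instead.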

2. Application Workflow

- The application is dockerized.

- The container is deployed to ALPON via ALPON Cloud.

- The deployed application runs continuously on the device.
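Because the application runs continuously and depends on the network, it can help to wait longer after repeated failures instead of retrying at a fixed rate. The sketch below shows one possible approach using only the standard library. `run_forever`, `next_delay`, and the delay values are hypothetical, and `task` stands in for the `client.run_workflow(...)` call from main.py.

```python
import time
from typing import Callable, Optional


def next_delay(failures: int, base: float = 60.0, cap: float = 900.0) -> float:
    """Return `base` after a success, doubling per consecutive failure up to `cap`."""
    return min(cap, base * (2 ** failures))


def run_forever(
    task: Callable[[], object],
    base: float = 60.0,
    cap: float = 900.0,
    sleep: Callable[[float], None] = time.sleep,
    max_iterations: Optional[int] = None,
) -> None:
    """Call `task` repeatedly, sleeping longer after consecutive failures."""
    failures = 0
    iterations = 0
    # max_iterations exists only to make the loop testable; None means run forever.
    while max_iterations is None or iterations < max_iterations:
        try:
            print("Inference result:", task())
            failures = 0  # reset the backoff after any success
        except Exception as exc:
            failures += 1
            print("Error during inference:", exc)
        sleep(next_delay(failures, base, cap))
        iterations += 1
```

With the defaults, a healthy connection keeps the original 60-second interval, while an outage stretches the wait to 120, 240, and eventually 900 seconds, which reduces wasted cellular traffic.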

3. Deploying Your Roboflow Inference Application on ALPON Cloud

Follow the steps below to deploy your Roboflow inference container to your ALPON device through ALPON Cloud.

3.1 Build Container Image

In the folder containing your Dockerfile and main.py, build the image targeting the ARM64 platform (for ALPON X4):

    docker buildx build --platform linux/arm64 --load -t roboflow-inference .

3.2 Upload Image to ALPON Cloud

- Log in to ALPON Cloud.

- Go to your device’s Registry tab.

- Click "+ Add Container" and upload your roboflow-inference container image.

3.3 Deploy the Container

- Go to the Applications tab of your asset and click Deploy App.

- In the deployment configuration window:

  - Container Name: roboflow-inference (or your preferred name)

  - Select Image & Tag: Choose the uploaded roboflow-inference image.

  - Set Environment Variables: Add the following environment variables with values obtained from your Roboflow workspace and workflow:

| Key | Value | Description |
|---|---|---|
| ROBOFLOW_API_KEY | Your Roboflow API key | API key from Roboflow Settings → API Keys |
| ROBOFLOW_WORKSPACE | Your Roboflow workspace name | From your Roboflow account |
| ROBOFLOW_WORKFLOW_ID | Your Roboflow workflow ID | From your Roboflow Workflow configuration |
| ROBOFLOW_API_URL | https://serverless.roboflow.com (default) | Optional. Roboflow API base URL |
| IMAGE_PATH | Path to the input image on the device, e.g., /data/image.jpg | Optional. Defaults to /data/image.jpg |

- Click Deploy.
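A misspelled or missing variable in this form only surfaces at runtime, so it can be useful for the application to report exactly which keys are absent. The following sketch is not part of the original main.py. `load_config` and `WorkflowConfig` are hypothetical names, and the keys and defaults mirror the environment variable table.

```python
import os
from dataclasses import dataclass
from typing import Mapping

REQUIRED_KEYS = ("ROBOFLOW_API_KEY", "ROBOFLOW_WORKSPACE", "ROBOFLOW_WORKFLOW_ID")


@dataclass(frozen=True)
class WorkflowConfig:
    api_key: str
    workspace: str
    workflow_id: str
    api_url: str
    image_path: str


def load_config(env: Mapping[str, str] = os.environ) -> WorkflowConfig:
    """Build the configuration, naming every required key that is missing or empty."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise EnvironmentError(
            "Missing required environment variables: " + ", ".join(missing)
        )
    return WorkflowConfig(
        api_key=env["ROBOFLOW_API_KEY"],
        workspace=env["ROBOFLOW_WORKSPACE"],
        workflow_id=env["ROBOFLOW_WORKFLOW_ID"],
        api_url=env.get("ROBOFLOW_API_URL", "https://serverless.roboflow.com"),
        image_path=env.get("IMAGE_PATH", "/data/image.jpg"),
    )
```

Calling `load_config()` once at startup makes a failed deployment easier to diagnose from the logs, since the error lists the specific keys to fix.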

3.4 Deployment Status

- When the deployment completes successfully, your roboflow-inference container is running on the target ALPON X4 device.

- Check the ALPON Cloud Applications tab for real-time status and logs if needed.
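The raw result printed by main.py can be verbose when read in the logs. As a sketch, the helper below condenses it into one line per image. It assumes that `run_workflow` returns a list with one dictionary per image and that the workflow exposes an output named `predictions` containing a `predictions` list with `class` and `confidence` fields. That shape is typical for an object detection block but depends on how your workflow's outputs are configured. `summarize` is a hypothetical helper.

```python
from collections import Counter
from typing import Any


def summarize(
    result: list[dict[str, Any]],
    output_name: str = "predictions",  # assumption: name of the workflow output
    min_confidence: float = 0.5,
) -> list[str]:
    """Return one summary line per image, counting detections per class."""
    lines = []
    for i, item in enumerate(result):
        output = item.get(output_name) or {}
        detections = output.get("predictions", []) if isinstance(output, dict) else []
        counts = Counter(
            d.get("class", "unknown")
            for d in detections
            if d.get("confidence", 0.0) >= min_confidence
        )
        summary = ", ".join(f"{name} x{n}" for name, n in sorted(counts.items()))
        lines.append(f"image {i}: {summary or 'no detections'}")
    return lines
```

In main.py, `print("\n".join(summarize(result)))` could replace the raw print once the output name has been checked against your workflow.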
